rbm-ae-tf-master

Category: Other

Development tool: Python

File size: 965KB

Downloads: 8

Upload date: 2018-01-27 11:41:06

Uploader: fengwushi

Description: An autoencoder implementation in TensorFlow. Autoencoders can extract features, which can then be used for classification and regression.

File list (size in bytes, date):

LICENSE (1079, 2017-11-15)

au.py (4959, 2017-11-15)

input_data.py (7559, 2017-11-15)

rbm.py (7519, 2017-11-15)

test-ae-rbm.py (4382, 2017-11-15)

test-img (0, 2017-11-15)

test-img\1rbm.jpg (233529, 2017-11-15)

test-img\2rbm.jpg (183599, 2017-11-15)

test-img\3rbm.jpg (53408, 2017-11-15)

test-img\deepauto.png (299032, 2017-11-15)

test-img\pcafig.png (210828, 2017-11-15)

util.py (5097, 2017-11-15)

utilsnn.py (1487, 2017-11-15)

# rbm-ae-tf

TensorFlow implementation of a Restricted Boltzmann Machine and an Autoencoder for layerwise pretraining of deep autoencoders with RBMs. The idea is to first train RBMs to pretrain the weights of the autoencoder. These weights are then loaded into the autoencoder, which is trained again. This implementation also lets you use tied weights for the autoencoder (that is, the encoding and decoding layers share the same weights, transposed).
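To make "tied weights" concrete, here is a minimal pure-Python sketch (not taken from `au.py`) of an unrolled autoencoder stack: the encoder applies each layer's matrix `W` in order, and the decoder walks the same matrices in reverse, applying `W` transposed. The function names, the toy 6 → 4 → 2 sizes (standing in for 784 → 100 → 20), the random weights (standing in for pretrained RBM weights), and the separate decoder biases are all illustrative assumptions.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer(x, W, b, transpose=False):
    """Dense sigmoid layer. W has shape (n_in, n_out); with transpose=True it is applied as W^T."""
    if transpose:
        # Input has length n_out, output has length n_in: output i uses row i of W
        return [sigmoid(sum(x[j] * W[i][j] for j in range(len(x))) + b[i])
                for i in range(len(W))]
    return [sigmoid(sum(x[i] * W[i][j] for i in range(len(x))) + b[j])
            for j in range(len(W[0]))]

rng = random.Random(0)
sizes = [6, 4, 2]  # hypothetical toy stand-in for the 784 -> 100 -> 20 stack
# One weight matrix per layer (assumption: random values instead of pretrained RBM weights)
weights = [[[rng.gauss(0.0, 0.1) for _ in range(n_out)] for _ in range(n_in)]
           for n_in, n_out in zip(sizes[:-1], sizes[1:])]
enc_biases = [[0.0] * n for n in sizes[1:]]   # encoder biases: lengths 4 and 2
dec_biases = [[0.0] * n for n in sizes[:-1]]  # decoder biases: lengths 6 and 4

def autoencode(x):
    h = x
    for W, b in zip(weights, enc_biases):
        h = layer(h, W, b)                        # encoder: 6 -> 4 -> 2
    code = h
    for W, b in zip(reversed(weights), reversed(dec_biases)):
        h = layer(h, W, b, transpose=True)        # tied decoder reuses W^T: 2 -> 4 -> 6
    return code, h

code, reconstruction = autoencode([1, 0, 1, 0, 1, 0])
print(len(code), len(reconstruction))  # 2 6
```

Only one matrix per layer is stored, which halves the number of weight parameters. The README's usage example passes `tied_weights=False`; in that mode the decoder presumably keeps its own matrices instead of reusing `W` transposed.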

I was inspired by these implementations, but I needed to refactor and improve them. I also tried to use an API similar to the one in [tensorflow/models](https://github.com/tensorflow/models):

> [myme5261314](https://gist.github.com/myme5261314/005ceac0483fc5a581cc)

> [saliksyed](https://gist.github.com/saliksyed/593c950ba1a3b9dd08d5)

> Thank you for your gists!

You can read more about weight pretraining in this paper:

##### [Reducing the Dimensionality of Data with Neural Networks](https://www.cs.toronto.edu/~hinton/science.pdf)

```python
from rbm import RBM
from au import AutoEncoder
import tensorflow as tf
import input_data

flags = tf.app.flags
FLAGS = flags.FLAGS
flags.DEFINE_string('data_dir', '/tmp/data/', 'Directory for storing data')

# First RBM
mnist = input_data.read_data_sets(FLAGS.data_dir, one_hot=True)
rbmobject1 = RBM(784, 100, ['rbmw1', 'rbvb1', 'rbmhb1'], 0.001)

# Second RBM
rbmobject2 = RBM(100, 20, ['rbmw2', 'rbvb2', 'rbmhb2'], 0.001)

# Autoencoder
autoencoder = AutoEncoder(784, [100, 20], [['rbmw1', 'rbmhb1'],
                                           ['rbmw2', 'rbmhb2']],
                          tied_weights=False)

# Train First RBM
for i in range(100):
    batch_xs, batch_ys = mnist.train.next_batch(10)
    cost = rbmobject1.partial_fit(batch_xs)
rbmobject1.save_weights('./rbmw1.chp')

# Train Second RBM
for i in range(100):
    # Transform features with first rbm for second rbm
    batch_xs, batch_ys = mnist.train.next_batch(10)
    batch_xs = rbmobject1.transform(batch_xs)
    cost = rbmobject2.partial_fit(batch_xs)
rbmobject2.save_weights('./rbmw2.chp')

# Load RBM weights to Autoencoder
autoencoder.load_rbm_weights('./rbmw1.chp', ['rbmw1', 'rbmhb1'], 0)
autoencoder.load_rbm_weights('./rbmw2.chp', ['rbmw2', 'rbmhb2'], 1)

# Train Autoencoder
for i in range(500):
    batch_xs, batch_ys = mnist.train.next_batch(10)
    cost = autoencoder.partial_fit(batch_xs)
autoencoder.save_weights('./au.chp')
autoencoder.load_weights('./au.chp')
```
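The example above trains each RBM one mini-batch at a time with `partial_fit`, then feeds the first RBM's `transform` output to the second. The README does not say which update rule `rbm.py` uses; the sketch below assumes standard one-step contrastive divergence (CD-1) for a binary RBM, as described in Hinton's practical guide to training RBMs, and is written in pure Python rather than TensorFlow. The class `RBMSketch`, its parameters, and the toy data are hypothetical; the method names only mirror the README's API for readability.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

class RBMSketch:
    """Hypothetical binary RBM trained with CD-1 (not the repository's rbm.py)."""

    def __init__(self, n_visible, n_hidden, learning_rate=0.1, seed=0):
        self.rng = random.Random(seed)
        self.lr = learning_rate
        # Small random weights, zero biases
        self.W = [[self.rng.gauss(0.0, 0.01) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.vb = [0.0] * n_visible  # visible bias
        self.hb = [0.0] * n_hidden   # hidden bias

    def _hidden_probs(self, v):
        return [sigmoid(sum(v[i] * self.W[i][j] for i in range(len(v))) + self.hb[j])
                for j in range(len(self.hb))]

    def _visible_probs(self, h):
        return [sigmoid(sum(h[j] * self.W[i][j] for j in range(len(h))) + self.vb[i])
                for i in range(len(self.vb))]

    def partial_fit(self, batch):
        """One CD-1 update on a mini-batch; returns mean squared reconstruction error."""
        n_v, n_h = len(self.vb), len(self.hb)
        dW = [[0.0] * n_h for _ in range(n_v)]
        dvb, dhb, err = [0.0] * n_v, [0.0] * n_h, 0.0
        for v0 in batch:
            h0 = self._hidden_probs(v0)
            # Sample binary hidden states, then reconstruct (negative phase)
            h0_sample = [1.0 if self.rng.random() < p else 0.0 for p in h0]
            v1 = self._visible_probs(h0_sample)
            h1 = self._hidden_probs(v1)
            for i in range(n_v):
                for j in range(n_h):
                    # Positive (data) statistics minus negative (reconstruction) statistics
                    dW[i][j] += v0[i] * h0[j] - v1[i] * h1[j]
                dvb[i] += v0[i] - v1[i]
            for j in range(n_h):
                dhb[j] += h0[j] - h1[j]
            err += sum((a - b) ** 2 for a, b in zip(v0, v1)) / n_v
        scale = self.lr / len(batch)
        for i in range(n_v):
            for j in range(n_h):
                self.W[i][j] += scale * dW[i][j]
            self.vb[i] += scale * dvb[i]
        for j in range(n_h):
            self.hb[j] += scale * dhb[j]
        return err / len(batch)

    def transform(self, batch):
        """Hidden-unit probabilities, used as input to the next RBM in the stack."""
        return [self._hidden_probs(v) for v in batch]

# Toy binary data: two 6-pixel patterns, repeated
data = [[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]] * 5

rbm1 = RBMSketch(6, 4, learning_rate=0.5)
rbm2 = RBMSketch(4, 2, learning_rate=0.5)

first_cost = rbm1.partial_fit(data)
for _ in range(200):
    cost = rbm1.partial_fit(data)

# Greedy layerwise step: train the second RBM on the first RBM's features
features = rbm1.transform(data)
for _ in range(200):
    rbm2.partial_fit(features)

print(round(first_cost, 3), round(cost, 3))
```

Each RBM is trained only on the output of the layer below it, which is what makes the pretraining greedy and layerwise; the resulting weights are what would then be loaded into the deep autoencoder.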

Feel free to make updates and fixes. You can improve the implementation with tips from:

> [Practical Guide to training RBM](https://www.cs.toronto.edu/~hinton/absps/guideTR.pdf)

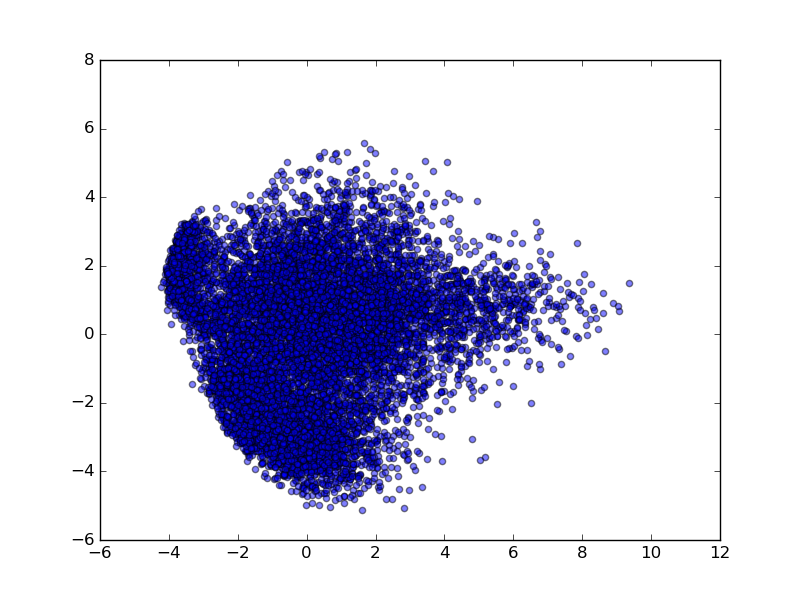

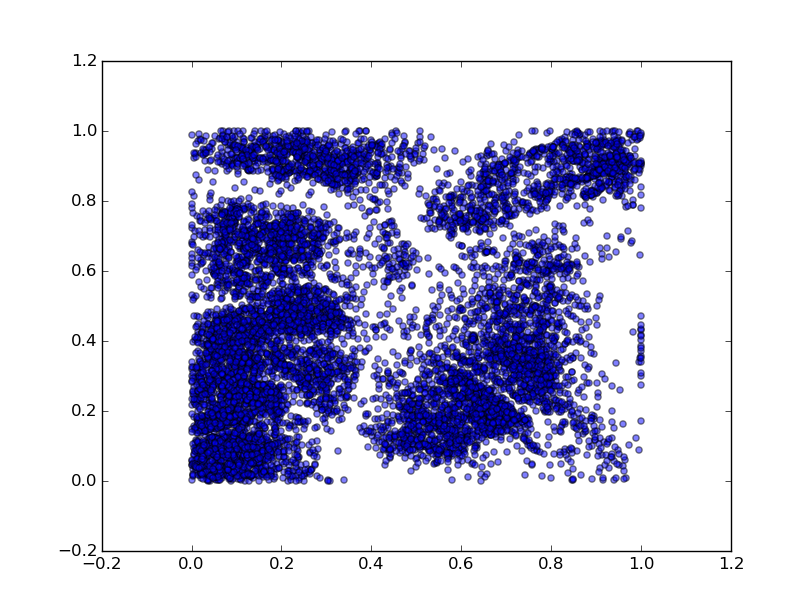

##### PCA vs DeepAutoencoder(RBM) on MNIST:
