kaldi-lstm-master

Category: Articles/Documents

Development tool: Perl

File size: 1738 KB

Downloads: 3

Upload date: 2019-03-18 12:19:00

Uploader: xxoospring

Description: an LSTM-based acoustic model design, built on Kaldi's LSTM model.

File list:

| Path | Size (bytes) | Date |
|---|---|---|
| `google` | (directory) | 2015-02-04 |
| `google\cudamatrix` | (directory) | 2015-02-04 |
| `google\cudamatrix\bd-cu-kernels-ansi.h` | 1163 | 2015-02-04 |
| `google\cudamatrix\bd-cu-kernels.cu` | 3411 | 2015-02-04 |
| `google\cudamatrix\bd-cu-kernels.h` | 1855 | 2015-02-04 |
| `google\cudamatrix\cu-matrix.cc` | 76244 | 2015-02-04 |
| `google\cudamatrix\cu-matrix.h` | 26562 | 2015-02-04 |
| `google\feature_transform.nnet.txt` | 860 | 2015-02-04 |
| `google\matrix` | (directory) | 2015-02-04 |
| `google\matrix\kaldi-matrix.cc` | 97428 | 2015-02-04 |
| `google\matrix\kaldi-matrix.h` | 38426 | 2015-02-04 |
| `google\nnet.proto` | 317 | 2015-02-04 |
| `google\nnet` | (directory) | 2015-02-04 |
| `google\nnet\bd-nnet-lstm-projected-streams.h` | 26554 | 2015-02-04 |
| `google\nnet\nnet-loss.cc` | 16195 | 2015-02-04 |
| `google\nnet\nnet-loss.h` | 3923 | 2015-02-04 |
| `google\nnet\nnet-nnet.h` | 5692 | 2015-02-04 |
| `google\nnetbin` | (directory) | 2015-02-04 |
| `google\nnetbin\bd-nnet-train-lstm-streams.cc` | 11890 | 2015-02-04 |
| `google\papers` | (directory) | 2015-02-04 |
| `google\papers\1990-williams-BPTT.pdf` | 213744 | 2015-02-04 |
| `google\papers\2014-icassp-google-LSTM-ASR.pdf` | 581489 | 2015-02-04 |
| `google\papers\2014-interspeech-google-LSTM-LVCSR.pdf` | 414109 | 2015-02-04 |
| `google\papers\2014-interspeech-google-LSTM-sequential-discriminative-LVCSR.pdf` | 285054 | 2015-02-04 |
| `google\papers\LSTM_dropout.pdf` | 264299 | 2015-02-04 |
| `google\train_lstm_streams.sh` | 2515 | 2015-02-04 |
| `misc` | (directory) | 2015-02-04 |
| `misc\LSTM_DIAG_EQUATION.jpg` | 178982 | 2015-02-04 |
| `standard` | (directory) | 2015-02-04 |
| `standard\nnet.proto` | 307 | 2015-02-04 |
| `standard\nnet` | (directory) | 2015-02-04 |
| `standard\nnet\nnet-lstm-projected.h` | 26613 | 2015-02-04 |
| `standard\nnet\nnet-time-shift.h` | 1908 | 2015-02-04 |
| `standard\nnet\nnet-transmit-component.h` | 945 | 2015-02-04 |

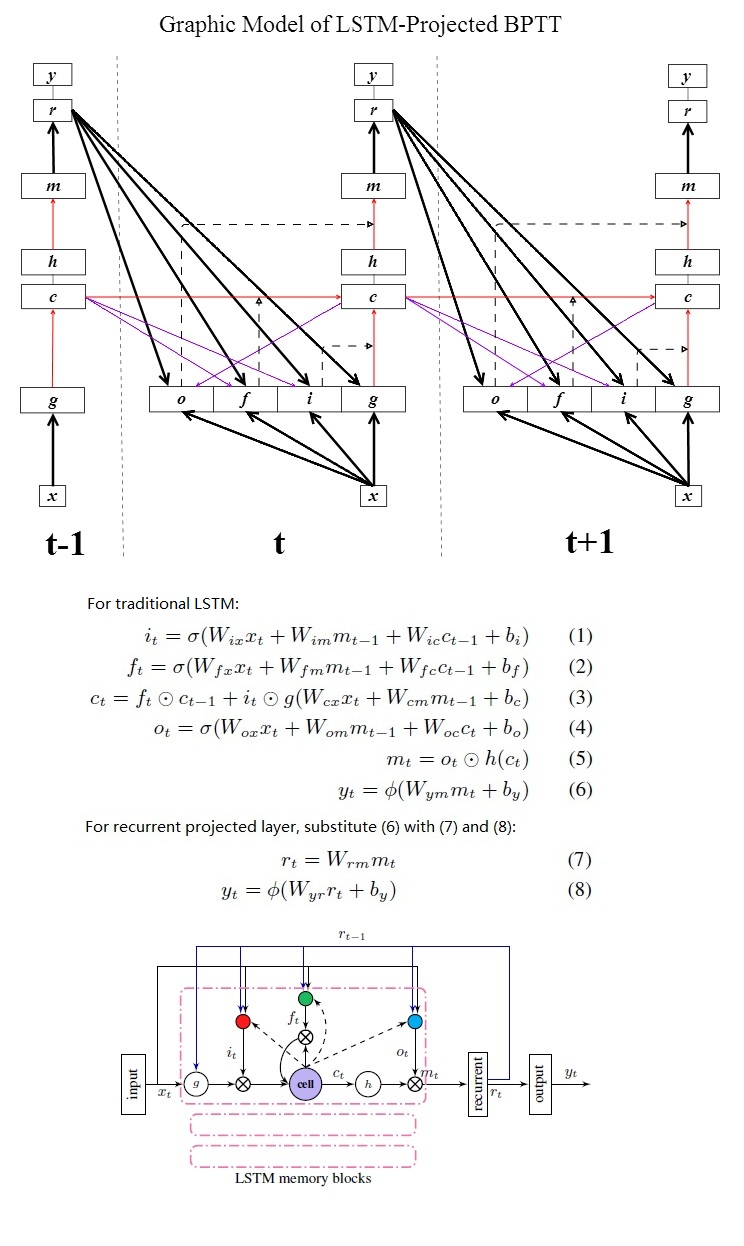

# LSTM-projected BPTT in Kaldi nnet1

## Diagram

## Notes

* Peephole connections (purple) are diagonal.

* The output-gate peephole is not recurrent: it reads the current cell state rather than the previous one.

* Dashed arrows denote adaptive weights, i.e. the activations of the input, forget, and output gates.
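For reference, the forward pass drawn in the diagram can be written out as below. This is a sketch following the LSTM-projected equations in Google's 2014 papers (see `google/papers`); the symbols are the papers' notation rather than names from `nnet-lstm-projected.h`, and taking $g$ and $h$ to be $\tanh$ is an assumption about this implementation. The peephole weights $w_{ic}, w_{fc}, w_{oc}$ are vectors applied elementwise, which is what "diagonal" means, and only the output gate's peephole reads $c_t$ instead of $c_{t-1}$.

$$
\begin{aligned}
i_t &= \sigma\left(W_{ix} x_t + W_{ir} r_{t-1} + w_{ic} \odot c_{t-1} + b_i\right) \\
f_t &= \sigma\left(W_{fx} x_t + W_{fr} r_{t-1} + w_{fc} \odot c_{t-1} + b_f\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot g\left(W_{cx} x_t + W_{cr} r_{t-1} + b_c\right) \\
o_t &= \sigma\left(W_{ox} x_t + W_{or} r_{t-1} + w_{oc} \odot c_t + b_o\right) \\
m_t &= o_t \odot h(c_t) \\
r_t &= W_{rm}\, m_t
\end{aligned}
$$

Here $r_t$ is the projected output that feeds both the next layer and the recurrence at $t+1$.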

The current implementation includes two versions:

* standard

* google

See each version's sub-directory for more details.

# FAQ

## Q1. How do I decode with an LSTM?

* Standard version: exactly the same as for a DNN. Feed the LSTM nnet to nnet-forward as the acoustic-model (AM) scorer. Remember that nnet-forward has no mechanism to delay targets, so a "TimeShift" component is needed to do this.

* Google version:

  - Convert the binary nnet to text format with nnet-copy (using "--binary=false") and open the text nnet in your text editor.

  - Change the "Transmit" component to "TimeShift", keeping its shift consistent with the "--targets-delay" value used in nnet-train-lstm-streams.

  - Rename "LstmProjectedStreams" to "LstmProjected" and remove the "NumStream" tag. The google version is now converted to the standard version, and you can perform AM scoring with nnet-forward, e.g.:

```
40 40 5
512 40 800 [ ...
16624 512 1 1 0 [ ...
16624 16624
```
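For intuition, here is a minimal C++ sketch of what a "TimeShift" at the network input can do: output frame t is input frame t + shift, so a causal LSTM has already seen `shift` future frames when it scores frame t, which is the same look-ahead a delayed target gives during training. The function name `TimeShiftForward` and the rule of padding the tail with the last frame are assumptions for illustration; the actual `nnet-time-shift.h` may differ.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// A frame is one feature vector; an utterance is a sequence of frames.
using Frame = std::vector<float>;
using Utterance = std::vector<Frame>;

// Hypothetical sketch, not the code in nnet-time-shift.h:
// output frame t is input frame t + shift (look-ahead of `shift` frames).
// The last `shift` output frames repeat the final input frame (assumed padding).
Utterance TimeShiftForward(const Utterance& in, std::size_t shift) {
  assert(!in.empty());
  const std::size_t num_frames = in.size();
  Utterance out;
  out.reserve(num_frames);
  for (std::size_t t = 0; t < num_frames; ++t) {
    // Clamp to the last frame so the output keeps the input's length.
    const std::size_t src = std::min(t + shift, num_frames - 1);
    out.push_back(in[src]);
  }
  return out;
}
```

With frames 0..4 and a shift of 2, the output frames are 2, 3, 4, 4, 4. In this picture the shift would be set equal to the targets delay used in training.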

## Q2. How do I stack more than one layer of LSTM?

In Google's paper, two layers of medium-sized LSTM are the best setup for beating a DNN on WER. You can build such a stack by editing the nnet at the text level:

* Use part of your training data to train a one-layer LSTM nnet.

* Convert it to text format with nnet-copy using "--binary=false".

* Insert the text of a pre-initialized LSTM component right after your pretrained LSTM (before the output layers that lead to the softmax). You can then feed all of your training data to the stacked LSTM, e.g.:

```
40 40
512 40 800 4 [ ...
512 512 800 4 [ ...
16624 512 1 1 0 [ ...
16624 16624
```
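When inserting a layer by hand, the dimensions must chain. In the nnet1 text format the first two numbers after a component tag are its output dim and then its input dim, so each component's input dim has to equal the previous component's output dim. A small sketch of that check, with a hypothetical `ComponentDims` struct standing in for one parsed component line (no parser for the real format is shown):

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for one component line of an nnet1 text model:
// a label plus the two dims that follow the tag (output dim first, then input dim).
struct ComponentDims {
  std::string name;
  int output_dim;
  int input_dim;
};

// Returns true when every component's input dim equals the previous
// component's output dim, i.e. the hand-edited stack is dimensionally valid.
bool DimsChain(const std::vector<ComponentDims>& nnet) {
  for (std::size_t k = 1; k < nnet.size(); ++k) {
    if (nnet[k].input_dim != nnet[k - 1].output_dim) return false;
  }
  return true;
}
```

For the stacked example above, the chain (input → output) is 40 → 40, 40 → 512, 512 → 512, 512 → 16624, 16624 → 16624, so the check passes; a second LSTM copied with input dim 40 would fail it.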

## Q3. How do I know when to use "Transmit" or "TimeShift"?

The key is how the target delay is applied.

* Standard version: the nnet should be trained with "TimeShift", because the default nnet1 training tools (nnet-train-frame-shuf and nnet-train-perutt) do not provide a target delay.

* Google version: because multi-stream training is complex, the training tool nnet-train-lstm-streams provides its own "--targets-delay" option, so in multi-stream training a dummy "Transmit" component is used instead, for a minor technical reason related to how nnet1 calls Backpropagate(). At test time, however, the google version is first converted to the standard version, so "Transmit" must also be switched to "TimeShift" during the conversion.
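To make the multi-stream case more concrete, below is a hedged sketch of how a trainer can fill one mini-batch from several utterances ("streams") with delayed targets. It assumes a time-major interleaved layout (row = t * num_streams + s), scalar features for brevity, and that frames with no delayed target, or past the end of a stream, are masked out of the loss. `Stream`, `Batch` and `MakeBatch` are hypothetical names, not the code in `bd-nnet-train-lstm-streams.cc`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One stream = one utterance: per-frame features (scalar here for brevity)
// and per-frame labels (e.g. pdf ids).
struct Stream {
  std::vector<float> feats;
  std::vector<int> labels;
};

// Interleaved mini-batch: row t * num_streams + s holds stream s at step t.
struct Batch {
  std::vector<float> feats;
  std::vector<int> targets;
  std::vector<float> mask;  // 0 = row ignored in the loss
};

// Fills `num_steps` time steps starting at frame `start` of every stream.
// The network output at frame t is trained against label t - delay.
Batch MakeBatch(const std::vector<Stream>& streams, std::size_t start,
                std::size_t num_steps, std::size_t delay) {
  const std::size_t num_streams = streams.size();
  Batch b;
  b.feats.assign(num_steps * num_streams, 0.0f);
  b.targets.assign(num_steps * num_streams, 0);
  b.mask.assign(num_steps * num_streams, 0.0f);
  for (std::size_t t = 0; t < num_steps; ++t) {
    for (std::size_t s = 0; s < num_streams; ++s) {
      const Stream& st = streams[s];
      assert(st.feats.size() == st.labels.size());
      const std::size_t frame = start + t;
      const std::size_t row = t * num_streams + s;
      if (frame >= st.feats.size()) continue;  // stream ended: zero-padded, masked
      b.feats[row] = st.feats[frame];
      if (frame < delay) continue;  // no delayed target yet: masked
      b.targets[row] = st.labels[frame - delay];
      b.mask[row] = 1.0f;
    }
  }
  return b;
}
```

Because the delay is applied when the targets are laid out, the network itself needs no shift in this training setup, which matches the pass-through role of "Transmit" described above.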

## Q4. Why is the "dropout" code commented out?

I implemented "forward-connection dropout" following another paper from Google, but I never implemented dropout retention, so the effect of dropout has not been tested at all. That is why I left the code commented out.
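A minimal sketch of dropout on the forward connection only, in the spirit of the dropout paper in `google/papers`: the mask is applied to the layer's input $x_t$, and the recurrent input $r_{t-1}$ is left untouched. Rescaling is done here as "inverted" dropout (kept units scaled by 1/retain during training, identity at test time); that choice, the function name `ForwardDropout` and its parameters are assumptions, not what the commented-out code does.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical sketch: dropout applied to the forward (non-recurrent) input x_t
// of an LSTM layer. The caller would NOT apply it to r_{t-1}.
// Inverted dropout: kept units are scaled by 1/retain while training,
// so the test-time pass is the identity.
std::vector<float> ForwardDropout(const std::vector<float>& x, float retain,
                                  bool training, std::mt19937& rng) {
  assert(retain > 0.0f && retain <= 1.0f);
  if (!training) return x;  // test time: no mask, no rescaling
  std::bernoulli_distribution keep(retain);
  std::vector<float> y(x.size());
  for (std::size_t i = 0; i < x.size(); ++i) {
    y[i] = keep(rng) ? x[i] / retain : 0.0f;
  }
  return y;
}
```

Doing the rescaling at training time keeps decoding with nnet-forward unchanged; the alternative is to scale activations by the retention probability at test time instead.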
