Zhi-Tu-PyTorch-GAN-master

Description: A comprehensive collection of PyTorch implementations of many GAN variants.

File list:

PyTorch-GAN (0, 2019-08-24)

PyTorch-GAN\LICENSE (1075, 2019-08-24)

PyTorch-GAN\assets (0, 2019-08-24)

PyTorch-GAN\assets\acgan.gif (1611811, 2019-08-24)

PyTorch-GAN\assets\bicyclegan.png (1042778, 2019-08-24)

PyTorch-GAN\assets\bicyclegan_architecture.jpg (126079, 2019-08-24)

PyTorch-GAN\assets\cgan.gif (2394580, 2019-08-24)

PyTorch-GAN\assets\cluster_gan.gif (7912889, 2019-08-24)

PyTorch-GAN\assets\cogan.gif (5188224, 2019-08-24)

PyTorch-GAN\assets\context_encoder.png (843326, 2019-08-24)

PyTorch-GAN\assets\cyclegan.png (1308368, 2019-08-24)

PyTorch-GAN\assets\dcgan.gif (760278, 2019-08-24)

PyTorch-GAN\assets\discogan.png (470871, 2019-08-24)

PyTorch-GAN\assets\enhanced_superresgan.png (499312, 2019-08-24)

PyTorch-GAN\assets\esrgan.png (551488, 2019-08-24)

PyTorch-GAN\assets\gan.gif (890597, 2019-08-24)

PyTorch-GAN\assets\infogan.gif (3544702, 2019-08-24)

PyTorch-GAN\assets\infogan.png (76559, 2019-08-24)

PyTorch-GAN\assets\logo.png (10112, 2019-08-24)

PyTorch-GAN\assets\munit.png (880599, 2019-08-24)

PyTorch-GAN\assets\pix2pix.png (347853, 2019-08-24)

PyTorch-GAN\assets\pixelda.png (26374, 2019-08-24)

PyTorch-GAN\assets\stargan.png (1702705, 2019-08-24)

PyTorch-GAN\assets\superresgan.png (601896, 2019-08-24)

PyTorch-GAN\assets\wgan_div.gif (305548, 2019-08-24)

PyTorch-GAN\assets\wgan_div.png (789642, 2019-08-24)

PyTorch-GAN\assets\wgan_gp.gif (401098, 2019-08-24)

PyTorch-GAN\data (0, 2019-08-24)

PyTorch-GAN\data\download_cyclegan_dataset.sh (1052, 2019-08-24)

PyTorch-GAN\data\download_pix2pix_dataset.sh (221, 2019-08-24)

PyTorch-GAN\implementations (0, 2019-08-24)

PyTorch-GAN\implementations\aae (0, 2019-08-24)

PyTorch-GAN\implementations\aae\aae.py (6615, 2019-08-24)

PyTorch-GAN\implementations\acgan (0, 2019-08-24)

PyTorch-GAN\implementations\acgan\acgan.py (8417, 2019-08-24)

PyTorch-GAN\implementations\began (0, 2019-08-24)

PyTorch-GAN\implementations\began\began.py (6812, 2019-08-24)

PyTorch-GAN\implementations\bgan (0, 2019-08-24)

... ...

Various style translations by varying the latent code.

### Boundary-Seeking GAN
_Boundary-Seeking Generative Adversarial Networks_

#### Authors
R Devon Hjelm, Athul Paul Jacob, Tong Che, Adam Trischler, Kyunghyun Cho, Yoshua Bengio

#### Abstract
Generative adversarial networks (GANs) are a learning framework that rely on training a discriminator to estimate a measure of difference between a target and generated distributions. GANs, as normally formulated, rely on the generated samples being completely differentiable w.r.t. the generative parameters, and thus do not work for discrete data. We introduce a method for training GANs with discrete data that uses the estimated difference measure from the discriminator to compute importance weights for generated samples, thus providing a policy gradient for training the generator. The importance weights have a strong connection to the decision boundary of the discriminator, and we call our method boundary-seeking GANs (BGANs). We demonstrate the effectiveness of the proposed algorithm with discrete image and character-based natural language generation. In addition, the boundary-seeking objective extends to continuous data, which can be used to improve stability of training, and we demonstrate this on Celeba, Large-scale Scene Understanding (LSUN) bedrooms, and Imagenet without conditioning.

[[Paper]](https://arxiv.org/abs/1702.08431) [[Code]](implementations/bgan/bgan.py)

#### Run Example
```
$ cd implementations/bgan/
$ python3 bgan.py
```

### Cluster GAN
_ClusterGAN: Latent Space Clustering in Generative Adversarial Networks_

#### Authors
Sudipto Mukherjee, Himanshu Asnani, Eugene Lin, Sreeram Kannan

#### Abstract
Generative Adversarial networks (GANs) have obtained remarkable success in many unsupervised learning tasks and unarguably, clustering is an important unsupervised learning problem. While one can potentially exploit the latent-space back-projection in GANs to cluster, we demonstrate that the cluster structure is not retained in the GAN latent space. In this paper, we propose ClusterGAN as a new mechanism for clustering using GANs. By sampling latent variables from a mixture of one-hot encoded variables and continuous latent variables, coupled with an inverse network (which projects the data to the latent space) trained jointly with a clustering specific loss, we are able to achieve clustering in the latent space. Our results show a remarkable phenomenon that GANs can preserve latent space interpolation across categories, even though the discriminator is never exposed to such vectors. We compare our results with various clustering baselines and demonstrate superior performance on both synthetic and real datasets.

[[Paper]](https://arxiv.org/abs/1809.03627) [[Code]](implementations/cluster_gan/clustergan.py)

Code based on a full PyTorch [[implementation]](https://github.com/zhampel/clusterGAN).

#### Run Example
```
$ cd implementations/cluster_gan/
$ python3 clustergan.py
```
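The latent sampling that the ClusterGAN abstract describes (a one-hot encoded variable combined with continuous latent variables) can be illustrated with a minimal, framework-free sketch. It uses only the Python standard library rather than PyTorch tensors. The function name `sample_latent`, the dimensions, and the Gaussian scale `sigma=0.1` are assumptions chosen for illustration and are not taken from `clustergan.py`.

```python
import random


def sample_latent(n_continuous, n_classes, rng, sigma=0.1):
    """Draw one latent vector: a Gaussian part followed by a one-hot part.

    Hypothetical helper for illustration only; sigma is an assumed value.
    """
    # Continuous part: independent zero-mean Gaussian samples.
    zn = [rng.gauss(0.0, sigma) for _ in range(n_continuous)]
    # Discrete part: pick one cluster uniformly and one-hot encode it.
    class_id = rng.randrange(n_classes)
    zc = [1.0 if k == class_id else 0.0 for k in range(n_classes)]
    # The generator input is the concatenation of both parts.
    return zn + zc, class_id


rng = random.Random(0)  # fixed seed so the sketch is repeatable
z, class_id = sample_latent(n_continuous=4, n_classes=3, rng=rng)
print(len(z), class_id, z[4:])
```

The one-hot tail of `z` is what gives the latent space a discrete cluster identity, while the continuous head carries within-cluster variation.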

Rows: Masked | Inpainted | Original | Masked | Inpainted | Original

### Coupled GAN
_Coupled Generative Adversarial Networks_

#### Authors
Ming-Yu Liu, Oncel Tuzel

#### Abstract
We propose coupled generative adversarial network (CoGAN) for learning a joint distribution of multi-domain images. In contrast to the existing approaches, which require tuples of corresponding images in different domains in the training set, CoGAN can learn a joint distribution without any tuple of corresponding images. It can learn a joint distribution with just samples drawn from the marginal distributions. This is achieved by enforcing a weight-sharing constraint that limits the network capacity and favors a joint distribution solution over a product of marginal distributions one. We apply CoGAN to several joint distribution learning tasks, including learning a joint distribution of color and depth images, and learning a joint distribution of face images with different attributes. For each task it successfully learns the joint distribution without any tuple of corresponding images. We also demonstrate its applications to domain adaptation and image transformation.

[[Paper]](https://arxiv.org/abs/1606.07536) [[Code]](implementations/cogan/cogan.py)

#### Run Example
```
$ cd implementations/cogan/
$ python3 cogan.py
```

Generated MNIST and MNIST-M images

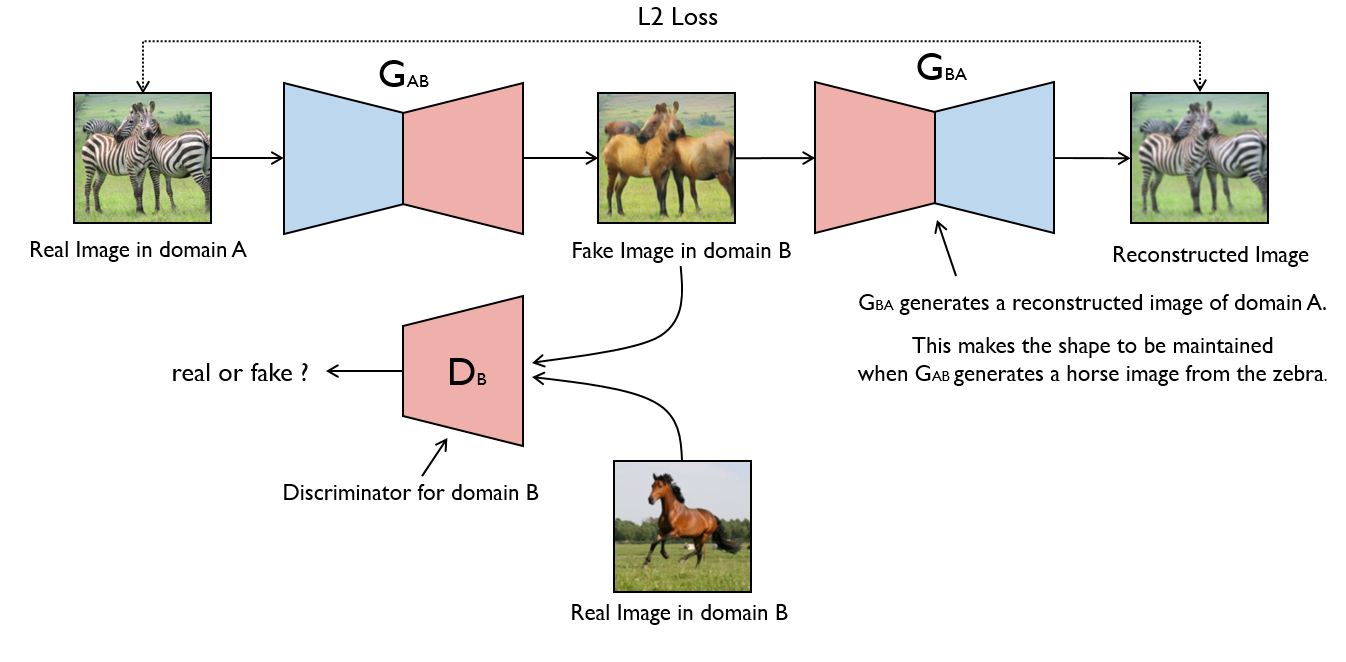
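The weight-sharing constraint mentioned in the CoGAN abstract can be illustrated with a toy sketch in plain Python. Two generators reuse the same first layer object and differ only in their domain-specific heads, so any update to the shared layer affects both outputs. The `Linear` class, the layer sizes, and the choice to share the first layer are assumptions made for this illustration; they do not reproduce the networks in `cogan.py`.

```python
class Linear:
    """Tiny dense layer on Python lists (illustrative stand-in for a real layer)."""

    def __init__(self, weights, bias):
        self.weights = weights  # list of rows, one row per output unit
        self.bias = bias        # one bias per output unit

    def __call__(self, x):
        return [
            sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(self.weights, self.bias)
        ]


# One layer object shared by both generators (the coupling).
shared = Linear([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
# Separate heads, one per image domain.
head_a = Linear([[1.0, 1.0]], [0.0])
head_b = Linear([[1.0, -1.0]], [0.0])


def generator_a(z):
    return head_a(shared(z))


def generator_b(z):
    return head_b(shared(z))


z = [2.0, 3.0]
pair_before = (generator_a(z), generator_b(z))

# A single change to the shared layer moves both generators at once.
shared.weights[0][0] = 2.0
pair_after = (generator_a(z), generator_b(z))
print(pair_before, pair_after)
```

Because both generators read the same latent vector through the same shared parameters, their outputs stay tied together even though no paired training examples are involved in this toy setup.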

### CycleGAN
_Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks_

#### Authors
Jun-Yan Zhu, Taesung Park, Phillip Isola, Alexei A. Efros

#### Abstract
Image-to-image translation is a class of vision and graphics problems where the goal is to learn the mapping between an input image and an output image using a training set of aligned image pairs. However, for many tasks, paired training data will not be available. We present an approach for learning to translate an image from a source domain X to a target domain Y in the absence of paired examples. Our goal is to learn a mapping G:X→Y such that the distribution of images from G(X) is indistinguishable from the distribution Y using an adversarial loss. Because this mapping is highly under-constrained, we couple it with an inverse mapping F:Y→X and introduce a cycle consistency loss to push F(G(X))≈X (and vice versa). Qualitative results are presented on several tasks where paired training data does not exist, including collection style transfer, object transfiguration, season transfer, photo enhancement, etc. Quantitative comparisons against several prior methods demonstrate the superiority of our approach.

[[Paper]](https://arxiv.org/abs/1703.10593) [[Code]](implementations/cyclegan/cyclegan.py)

Monet to photo translations.
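The cycle consistency idea from the CycleGAN abstract, pushing F(G(X))≈X and G(F(Y))≈Y, can be sketched on toy vectors with plain Python. The mean absolute (L1) distance used here, the helper names `l1` and `cycle_consistency_loss`, and the toy mappings are assumptions for illustration; the real mappings are neural networks, and this sketch is not the code in `cyclegan.py`.

```python
def l1(a, b):
    """Mean absolute difference between two equal-length vectors."""
    return sum(abs(ai - bi) for ai, bi in zip(a, b)) / len(a)


def cycle_consistency_loss(G, F, x, y):
    """Penalize round trips that fail to return to their starting point."""
    forward = l1(F(G(x)), x)   # X -> Y -> X
    backward = l1(G(F(y)), y)  # Y -> X -> Y
    return forward + backward


def G(v):
    # Toy mapping X -> Y: doubling.
    return [2.0 * t for t in v]


def F(v):
    # Exact inverse of G: halving.
    return [0.5 * t for t in v]


def F_bad(v):
    # Inverse with a constant offset error.
    return [0.5 * t + 1.0 for t in v]


x = [1.0, -2.0, 3.0]
y = [4.0, 0.0, -6.0]

perfect = cycle_consistency_loss(G, F, x, y)        # exact inverses: no penalty
imperfect = cycle_consistency_loss(G, F_bad, x, y)  # offset error is penalized
print(perfect, imperfect)
```

With exact inverses the loss is zero, and any mismatch between the two mappings raises it, which is how this term constrains an otherwise under-constrained translation.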

### Deep Convolutional GAN
_Deep Convolutional Generative Adversarial Network_

#### Authors
Alec Radford, Luke Metz, Soumith Chintala

#### Abstract
In recent years, supervised learning with convolutional networks (CNNs) has seen huge adoption in computer vision applications. Comparatively, unsupervised learning with CNNs has received less attention. In this work we hope to help bridge the gap between the success of CNNs for supervised learning and unsupervised learning. We introduce a class of CNNs called deep convolutional generative adversarial networks (DCGANs), that have certain architectural constraints, and demonstrate that they are a strong candidate for unsupervised learning. Training on various image datasets, we show convincing evidence that our deep convolutional adversarial pair learns a hierarchy of representations from object parts to scenes in both the generator and discriminator. Additionally, we use the learned features for novel tasks - demonstrating their applicability as general image representations.

[[Paper]](https://arxiv.org/abs/1511.0***34) [[Code]](implementations/dcgan/dcgan.py)

#### Run Example
```
$ cd implementations/dcgan/
$ python3 dcgan.py
```
