SpiderKeeper

Category: Data collection / Web crawlers

Development tool: Python

File size: 1850KB


Upload date: 2023-05-04 20:44:05

Uploader: sh-1993

Description: admin UI for Scrapy / open-source Scrapinghub

File list:

CHANGELOG.md (817, 2018-05-29)

MANIFEST.in (90, 2018-05-29)

SpiderKeeper (0, 2018-05-29)

SpiderKeeper\__init__.py (46, 2018-05-29)

SpiderKeeper\app (0, 2018-05-29)

SpiderKeeper\app\__init__.py (3400, 2018-05-29)

SpiderKeeper\app\proxy (0, 2018-05-29)

SpiderKeeper\app\proxy\__init__.py (0, 2018-05-29)

SpiderKeeper\app\proxy\contrib (0, 2018-05-29)

SpiderKeeper\app\proxy\contrib\__init__.py (0, 2018-05-29)

SpiderKeeper\app\proxy\contrib\scrapy.py (3893, 2018-05-29)

SpiderKeeper\app\proxy\spiderctrl.py (7407, 2018-05-29)

SpiderKeeper\app\schedulers (0, 2018-05-29)

SpiderKeeper\app\schedulers\__init__.py (0, 2018-05-29)

SpiderKeeper\app\schedulers\common.py (3259, 2018-05-29)

SpiderKeeper\app\spider (0, 2018-05-29)

SpiderKeeper\app\spider\__init__.py (0, 2018-05-29)

SpiderKeeper\app\spider\controller.py (23859, 2018-05-29)

SpiderKeeper\app\spider\model.py (9509, 2018-05-29)

SpiderKeeper\app\static (0, 2018-05-29)

SpiderKeeper\app\static\css (0, 2018-05-29)

SpiderKeeper\app\static\css\AdminLTE.min.css (90391, 2018-05-29)

SpiderKeeper\app\static\css\alt (0, 2018-05-29)

SpiderKeeper\app\static\css\alt\AdminLTE-without-plugins.min.css (73645, 2018-05-29)

SpiderKeeper\app\static\css\app.css (218, 2018-05-29)

SpiderKeeper\app\static\css\bootstrap.min.css (121200, 2018-05-29)

SpiderKeeper\app\static\css\bootstrap.min.css.map (542194, 2018-05-29)

SpiderKeeper\app\static\css\font-awesome.min.css (31000, 2018-05-29)

SpiderKeeper\app\static\css\ionicons.min.css (51284, 2018-05-29)

SpiderKeeper\app\static\css\skins (0, 2018-05-29)

SpiderKeeper\app\static\css\skins\_all-skins.min.css (40757, 2018-05-29)

SpiderKeeper\app\static\css\skins\skin-black-light.min.css (4096, 2018-05-29)

SpiderKeeper\app\static\fonts (0, 2018-05-29)

SpiderKeeper\app\static\fonts\FontAwesome.otf (134808, 2018-05-29)

SpiderKeeper\app\static\fonts\fontawesome-webfont.eot (165742, 2018-05-29)

SpiderKeeper\app\static\fonts\fontawesome-webfont.svg (444379, 2018-05-29)

SpiderKeeper\app\static\fonts\fontawesome-webfont.ttf (165548, 2018-05-29)

... ...

# SpiderKeeper

[Latest version on PyPI](https://pypi.python.org/pypi/SpiderKeeper)

[Python versions](https://pypi.python.org/pypi/SpiderKeeper)

[MIT License](https://github.com/DormyMo/SpiderKeeper/blob/master/LICENSE)

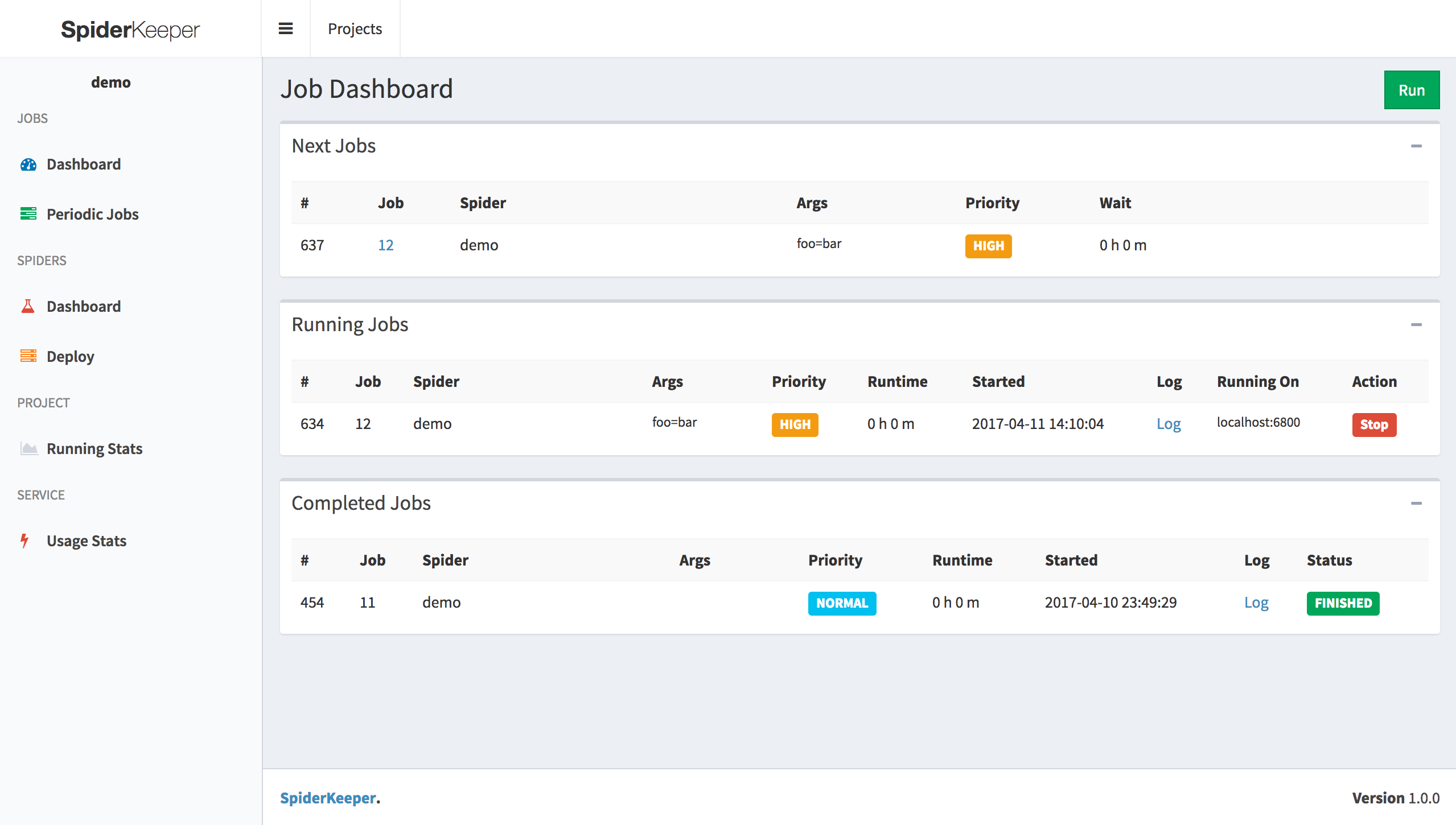

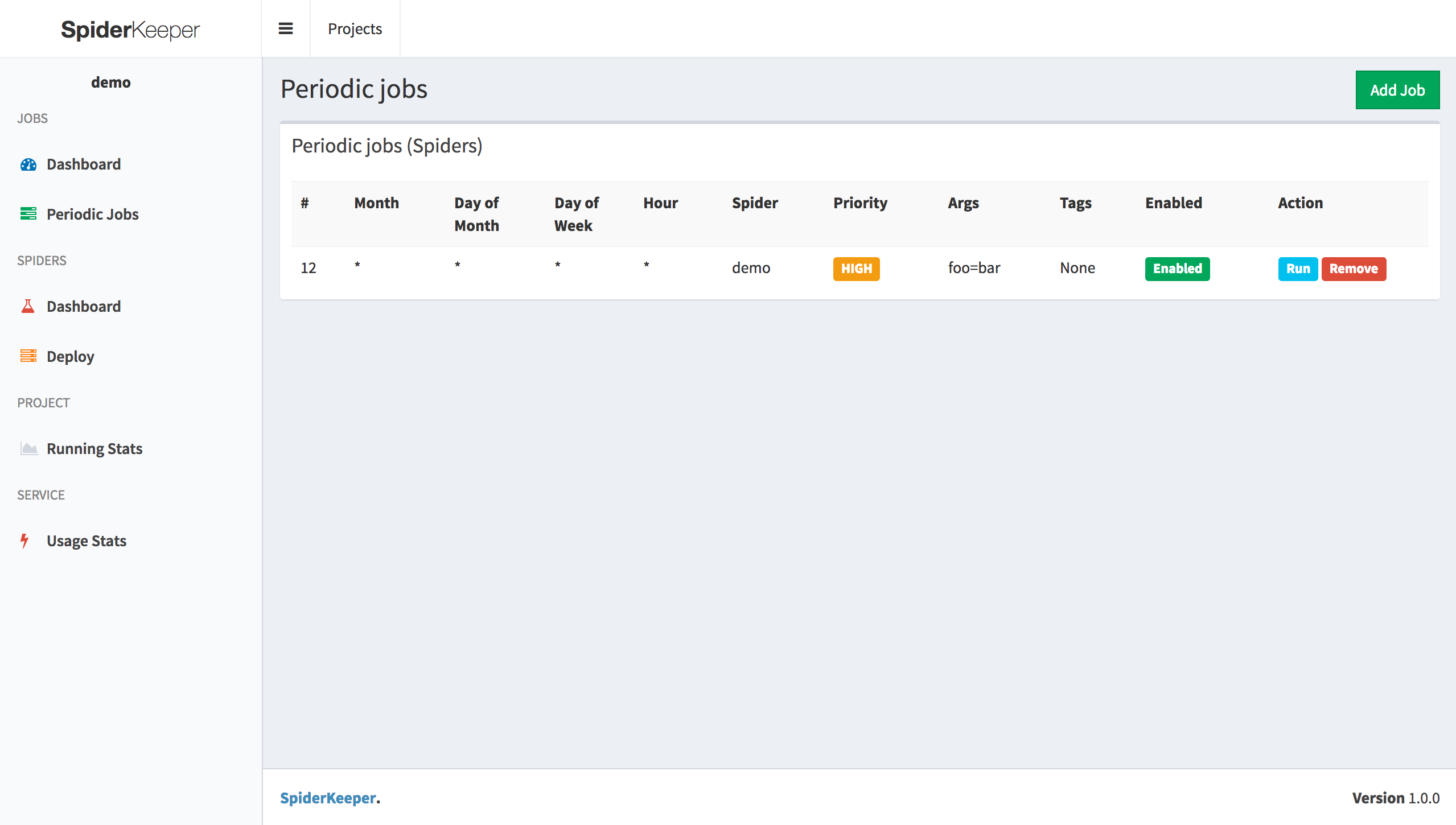

A scalable admin UI for spider services.

## Features

- Manage your spiders from a dashboard. Schedule them to run automatically

- With a single click deploy the scrapy project

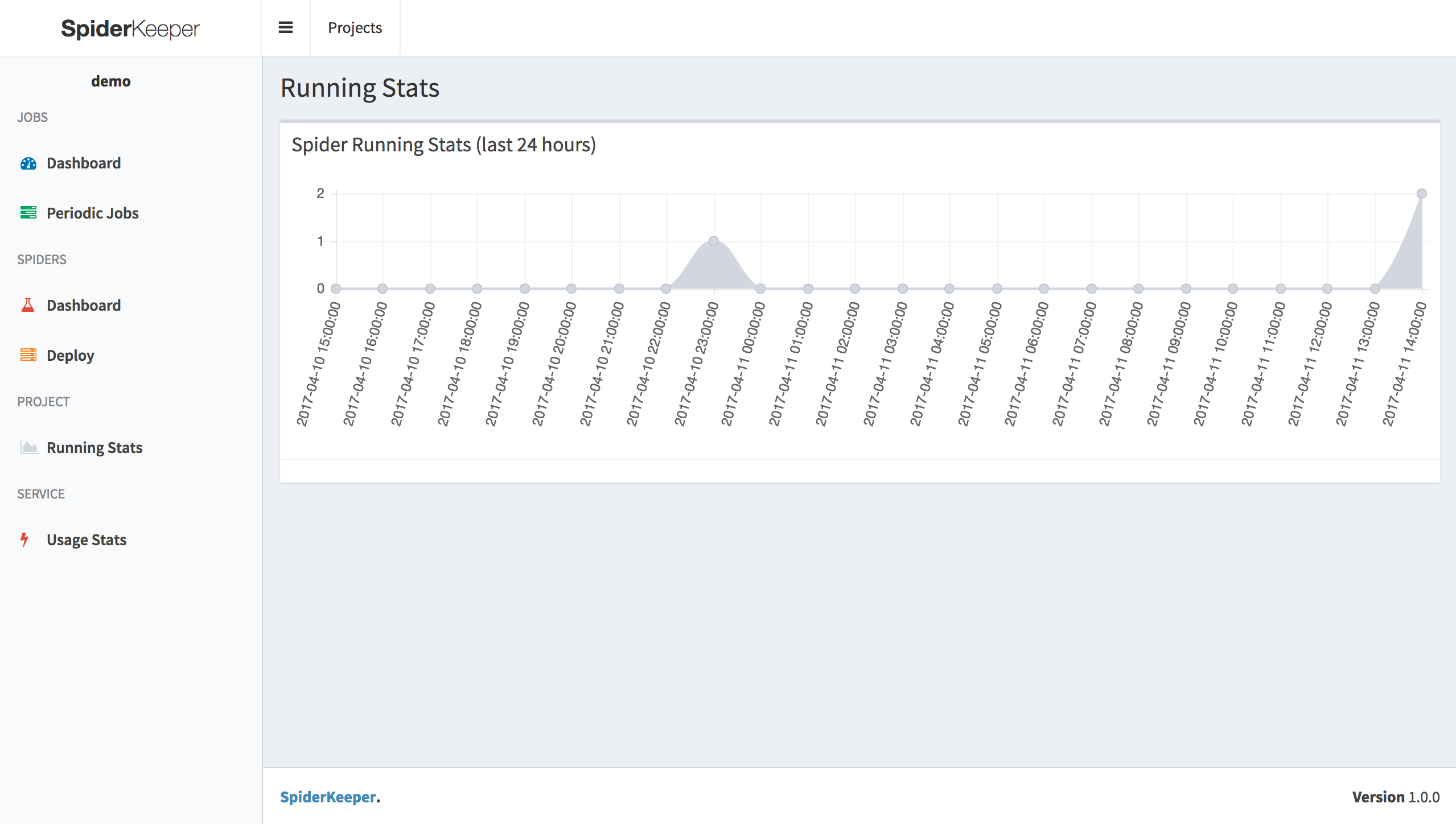

- Show spider running stats

- Provide api

Current Support spider service

- [Scrapy](https://github.com/scrapy/scrapy) ( with [scrapyd](https://github.com/scrapy/scrapyd))
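scrapyd serves an HTTP JSON API, by default on port 6800, which is also the address SpiderKeeper's `--server` option falls back to. Before pointing SpiderKeeper at a node, it can help to confirm that scrapyd is actually up. The sketch below assumes scrapyd's documented `daemonstatus.json` endpoint; the helper names `daemon_status_url`, `parse_daemon_status` and `check_scrapyd` are hypothetical and not part of SpiderKeeper.

```python
import json
from urllib.parse import urljoin
from urllib.request import urlopen


def daemon_status_url(server: str) -> str:
    """Build the daemonstatus.json URL for a scrapyd base URL."""
    # A trailing slash makes urljoin append to the path instead of replacing its last segment.
    return urljoin(server.rstrip("/") + "/", "daemonstatus.json")


def parse_daemon_status(body: str) -> dict:
    """Parse a daemonstatus.json response into integer job counters."""
    data = json.loads(body)
    if data.get("status") != "ok":
        raise RuntimeError(f"scrapyd reported status {data.get('status')!r}")
    # int() accepts the counters whether scrapyd sends them as strings or numbers.
    return {key: int(data.get(key, 0)) for key in ("running", "pending", "finished")}


def check_scrapyd(server: str = "http://localhost:6800", timeout: float = 5.0) -> dict:
    """Query a live scrapyd node (performs a real network call)."""
    with urlopen(daemon_status_url(server), timeout=timeout) as resp:
        return parse_daemon_status(resp.read().decode("utf-8"))
```

`check_scrapyd()` only succeeds while a scrapyd server is listening at the given address; the two pure helpers can be reused for each entry you plan to pass to `--server`.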

## Screenshot

## Getting Started

### Installing

```
pip install spiderkeeper
```

### Deployment

```
spiderkeeper [options]

Options:
  -h, --help            show this help message and exit
  --host=HOST           host, default: 0.0.0.0
  --port=PORT           port, default: 5000
  --username=USERNAME   basic auth username, default: admin
  --password=PASSWORD   basic auth password, default: admin
  --type=SERVER_TYPE    access spider server type, default: scrapyd
  --server=SERVERS      servers, default: ['http://localhost:6800']
  --database-url=DATABASE_URL
                        SpiderKeeper metadata database, default: sqlite:////home/souche/SpiderKeeper.db
  --no-auth             disable basic auth
  -v, --verbose         log level

example:

spiderkeeper --server=http://localhost:6800
```
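If you launch SpiderKeeper from a script or a process manager, the options above can be assembled programmatically. This is a minimal sketch that uses only the flags listed in the help text; `build_spiderkeeper_argv` is a hypothetical helper, and passing `--server` once per scrapyd node is an assumption about how multiple servers are supplied.

```python
import shlex


def build_spiderkeeper_argv(
    servers,
    host="0.0.0.0",
    port=5000,
    username=None,
    password=None,
    database_url=None,
    no_auth=False,
):
    """Assemble a spiderkeeper command line from the documented options."""
    argv = ["spiderkeeper", f"--host={host}", f"--port={port}"]
    if no_auth:
        # Basic auth disabled, so credentials are irrelevant.
        argv.append("--no-auth")
    else:
        if username is not None:
            argv.append(f"--username={username}")
        if password is not None:
            argv.append(f"--password={password}")
    if database_url is not None:
        argv.append(f"--database-url={database_url}")
    # Assumption: one --server flag per scrapyd node.
    argv.extend(f"--server={server}" for server in servers)
    return argv


if __name__ == "__main__":
    argv = build_spiderkeeper_argv(["http://localhost:6800"])
    print(shlex.join(argv))
    # To start the server for real: subprocess.run(argv, check=True)
```

Returning a list rather than a single string lets `subprocess.run` receive the arguments without any shell quoting, while `shlex.join` is only used to display the command.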

## Usage

```
Visit:

- web UI: http://localhost:5000

  1. Create a project
  2. Use scrapyd-client (https://github.com/scrapy/scrapyd-client) to generate an egg file:

       scrapyd-deploy --build-egg output.egg

  3. Upload the egg file (make sure the scrapyd server is running)
  4. Done. Enjoy!

- API (Swagger): http://localhost:5000/api.html
```
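A malformed egg usually only shows up as an error after it has been uploaded. This sketch checks `output.egg` locally first. It assumes the egg was produced by `scrapyd-deploy --build-egg`, where the `setup.py` that scrapyd-deploy generates (when the project has none) declares a `settings` entry point in the `scrapy` group, which scrapyd uses to locate the project settings. `check_egg` is a hypothetical helper, not part of SpiderKeeper or scrapyd-client.

```python
import configparser
import zipfile
from pathlib import Path


def check_egg(path) -> list:
    """Return a list of problems; an empty list means the egg looks deployable."""
    path = Path(path)
    # An egg is a zip archive with its metadata under EGG-INFO/.
    if not zipfile.is_zipfile(path):
        return [f"{path} is not a zip archive"]
    problems = []
    with zipfile.ZipFile(path) as egg:
        names = set(egg.namelist())
        if "EGG-INFO/PKG-INFO" not in names:
            problems.append("missing EGG-INFO/PKG-INFO")
        if "EGG-INFO/entry_points.txt" not in names:
            problems.append("missing EGG-INFO/entry_points.txt")
        else:
            # entry_points.txt is INI-style: [group] followed by name = module lines.
            parser = configparser.ConfigParser(interpolation=None)
            parser.read_string(egg.read("EGG-INFO/entry_points.txt").decode("utf-8"))
            if not parser.has_option("scrapy", "settings"):
                problems.append("no 'settings' entry point in the [scrapy] group")
    return problems
```

For example, `print(check_egg("output.egg") or "output.egg looks deployable")` after running `scrapyd-deploy --build-egg output.egg`.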

## TODO

- [ ] Support filtering in the job dashboard

- [x] User authentication

- [ ] Collect and show Scrapy crawl stats

- [ ] Optimize load balancing

## Versioning

We use [SemVer](http://semver.org/) for versioning. For the versions available, see the [tags on this repository](https://github.com/DormyMo/SpiderKeeper/tags).

## Authors

- *Initial work* - [DormyMo](https://github.com/DormyMo)

See also the list of [contributors](https://github.com/DormyMo/SpiderKeeper/contributors) who participated in this project.

## License

This project is licensed under the MIT License - see the [LICENSE.md](LICENSE.md) file for details

## Contributing

Contributions are welcome!

## Feedback

QQ groups:

Group 1: 389688974 (full)

Group 2: 285668943

## Donations
